MoMa-LLM

Language-Grounded Dynamic Scene Graphs for Interactive Object Search with Mobile Manipulation

Daniel Honerkamp*, Martin Büchner*, Fabien Despinoy, Tim Welschehold, Abhinav Valada

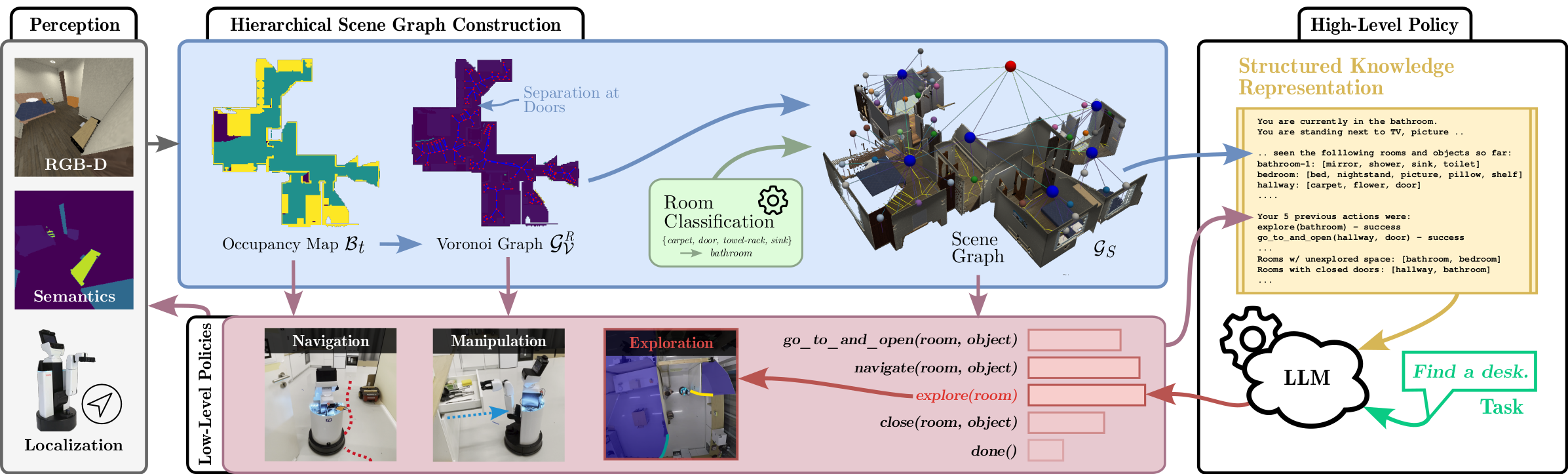

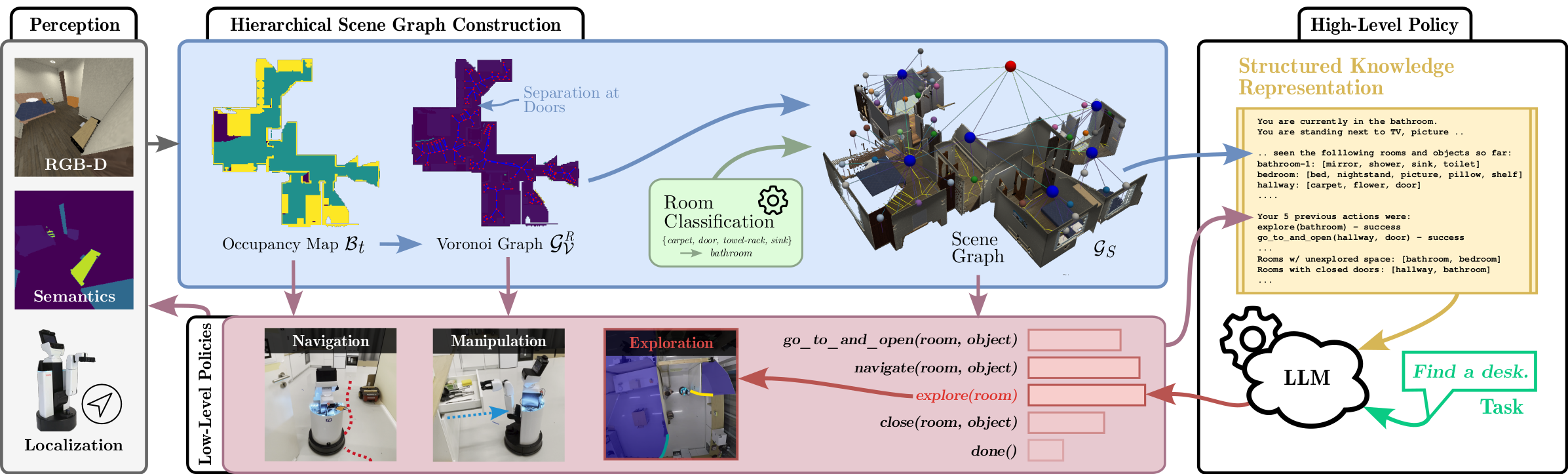

To fully leverage the capabilities of mobile manipulation robots, it is imperative that they are able to autonomously execute long-horizon tasks in large unexplored environments. While large language models (LLMs) have shown emergent reasoning skills on arbitrary tasks, existing work primarily concentrates on explored environments, typically focusing on either navigation or manipulation tasks in isolation. In this work, we propose MoMa-LLM, a novel approach that grounds language models within structured representations derived from open-vocabulary scene graphs, dynamically updated as the environment is explored. We tightly interleave these representations with an object-centric action space. The resulting approach is zero-shot, open-vocabulary, and readily extendable to a spectrum of mobile manipulation and household robotic tasks. We demonstrate the effectiveness of MoMa-LLM in a novel semantic interactive search task in large realistic indoor environments. In extensive experiments in both simulation and the real world, we show substantially improved search efficiency compared to conventional baselines and state-of-the-art approaches, and we demonstrate the applicability of MoMa-LLM to more abstract tasks.
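To make the idea of grounding a language model in a dynamically updated scene graph more concrete, the following is a minimal sketch and not the MoMa-LLM implementation. It assumes a toy room-to-object graph that grows as observations arrive and is serialized to plain text for a prompt. The names `SceneGraph`, `add_observation`, and `to_prompt` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SceneGraph:
    """Toy room -> objects graph (hypothetical, for illustration only)."""

    rooms: dict[str, list[str]] = field(default_factory=dict)

    def add_observation(self, room: str, obj: str) -> None:
        """Record that `obj` was seen in `room`, creating the room if it is new."""
        objects = self.rooms.setdefault(room, [])
        if obj not in objects:
            objects.append(obj)

    def to_prompt(self) -> str:
        """Serialize the graph as plain text that could be placed in an LLM prompt."""
        lines = []
        for room, objects in self.rooms.items():
            listed = ", ".join(objects) if objects else "nothing observed yet"
            lines.append(f"- {room}: {listed}")
        return "\n".join(lines)


# The graph is updated incrementally as the (assumed) robot explores.
graph = SceneGraph()
graph.add_observation("kitchen", "fridge")
graph.add_observation("kitchen", "cabinet")
graph.add_observation("living room", "sofa")
prompt_text = graph.to_prompt()
print(prompt_text)
```

In a full system, text like this would be combined with the task description and a list of available object-centric actions before querying the language model; those parts are omitted here.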

We transfer our policy to a four-room real-world apartment comprising a kitchen, a living room, a long hallway, and a bathroom. In the following demos, we show how MoMa-LLM can be used to solve general interactive object search tasks such as "Find a tea". In addition, we show how MoMa-LLM can reason about more abstract tasks such as "I am hungry. Find me something for breakfast."

This work is released under the CC BY-NC-SA license. A software implementation of this project can be found on GitHub.

If you find our work useful, please consider citing our paper:

This work was funded by Toyota Motor Europe (TME) and an academic grant from NVIDIA.